Sound Cloud

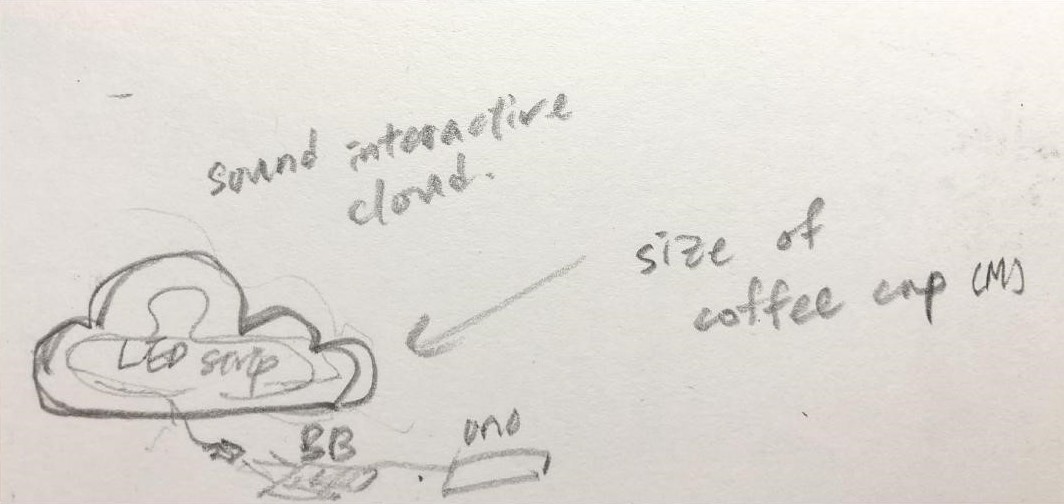

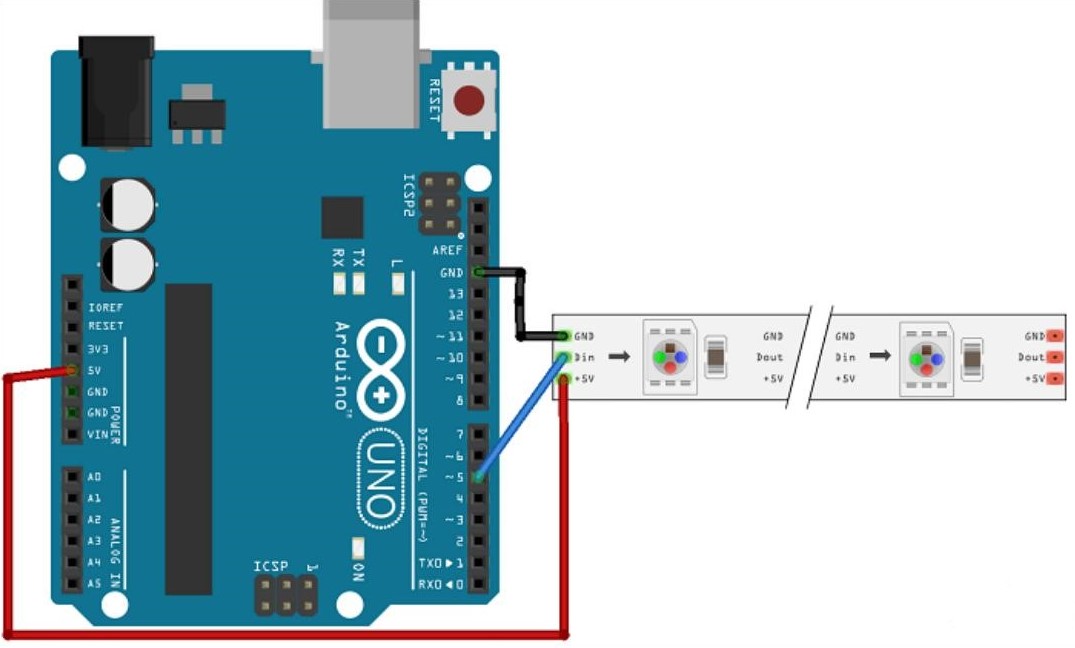

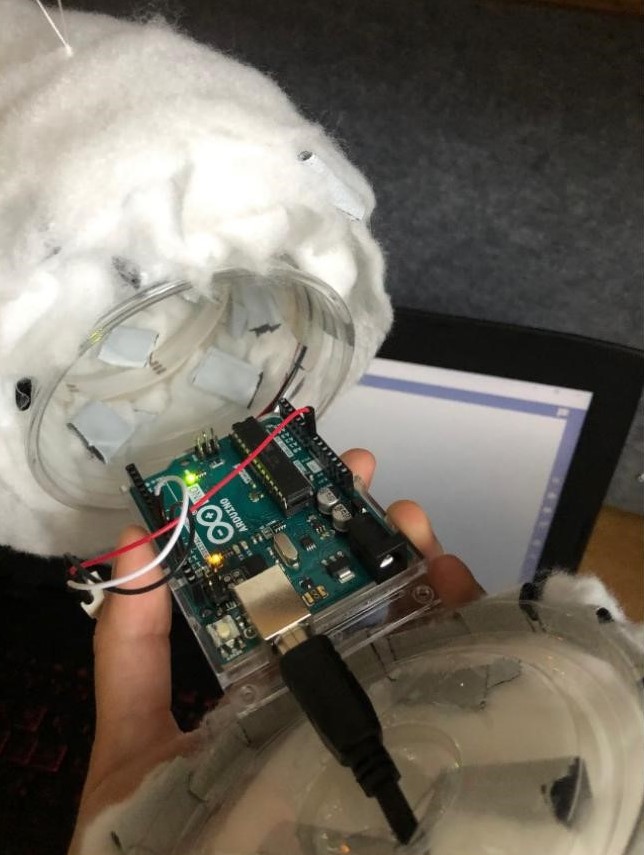

Cotton, iced coffee cup, Arduino, LED strip

2019

Sound Cloud is an immersive, multisensory experience that connects light and sound by translating music into visual cues. A Max MSP sketch processes the audio signal and sends the resulting data to an Arduino housed inside a cotton cloud. The Arduino interprets this data and adjusts the cloud's colors to match the rhythm and intensity of the music.
